Distributed Coordination With ZooKeeper Part 5: Building a Distributed Lock

Posted on July 10, 2013 by Scott Leberknight

This is the fifth in a series of blogs that introduce Apache ZooKeeper. In the fourth blog, you saw a high-level view of ZooKeeper's architecture and data consistency guarantees. In this blog, we'll use all the knowledge we've gained thus far to implement a distributed lock.

The goal is to build a mutually exclusive lock between processes that could be running on different machines, possibly even on different networks or in different data centers. A further benefit is that clients know nothing about each other; they only know that they need to use the lock to access some shared resource, and that they must not access it unless they own the lock.

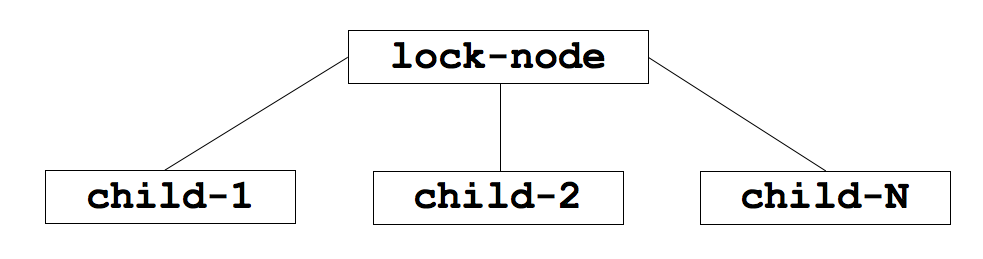

To build the lock, we'll create a persistent znode that will serve as the parent. Clients wishing to obtain the lock will create sequential, ephemeral child znodes under the parent znode. Because the children are ephemeral, a child disappears automatically when its client's session ends, so a crashed client cannot hold the lock forever. The lock is owned by the client process whose child znode has the lowest sequence number. In Figure 2, the lock znode has three children, and child-1 owns the lock at this point in time because it has the lowest sequence number. After child-1 is removed, the lock is relinquished and the client that owns child-2 owns the lock, and so on.

Figure 2 - Parent lock znode and child znodes

The algorithm for clients to determine whether they own the lock is straightforward, on the surface anyway. A client creates a new sequential ephemeral znode under the parent lock znode. The client then gets the children of the lock node and sets a watch on the lock node. If the child znode that the client created has the lowest sequence number, the lock is acquired, and the client can perform whatever actions are necessary with the resource that the lock is protecting. If its child znode does not have the lowest sequence number, the client waits for the watch to fire and then repeats the same steps: get the children, set a watch, and check whether its child now has the lowest sequence number. The client continues this process until it acquires the lock.
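To make the basic algorithm concrete, here is a self-contained Java sketch that runs it against a hypothetical in-memory stand-in for the lock znode instead of a real ZooKeeper ensemble. FakeLockNode, getChildrenAndWatch, awaitChange, and SimpleLockDemo are invented for illustration and are not ZooKeeper APIs. The "watch" is modeled with wait/notifyAll on the parent, so every waiting client wakes up on every change to the children, which is exactly the behavior the next paragraph warns about.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical in-memory stand-in for the parent lock znode (no ZooKeeper involved):
// sequential children, delete, and a "watch" that fires on any change to the children.
class FakeLockNode {
    static final class Snapshot {
        final List<String> children;
        final long version;
        Snapshot(List<String> c, long v) { children = c; version = v; }
    }

    private final List<String> children = new ArrayList<>();
    private int nextSeq = 0;
    private long version = 0; // bumped on every change to the children

    // Mimics ZooKeeper's 10-digit, zero-padded sequence suffix.
    synchronized String createSequential(String prefix) {
        String name = prefix + String.format("%010d", nextSeq++);
        children.add(name);
        changed();
        return name;
    }

    synchronized void delete(String name) {
        children.remove(name);
        changed();
    }

    // Like getChildren() with a watch: the children plus the version they were read at.
    synchronized Snapshot getChildrenAndWatch() {
        return new Snapshot(new ArrayList<>(children), version);
    }

    // Blocks until the children change after the snapshot was taken (the "watch event").
    synchronized void awaitChange(long seenVersion) throws InterruptedException {
        while (version == seenVersion) {
            wait();
        }
    }

    private void changed() {
        version++;
        notifyAll(); // wakes every waiting client, not just the next one: the herd effect
    }
}

public class SimpleLockDemo {
    static int sequenceOf(String name) {
        return Integer.parseInt(name.substring(name.length() - 10));
    }

    // Create a child, then loop until our child has the lowest sequence number.
    static String acquire(FakeLockNode lockNode) throws InterruptedException {
        String mine = lockNode.createSequential("child-");
        while (true) {
            FakeLockNode.Snapshot snap = lockNode.getChildrenAndWatch();
            String lowest = snap.children.stream()
                    .min(Comparator.comparingInt(SimpleLockDemo::sequenceOf)).get();
            if (lowest.equals(mine)) {
                return mine;                      // lowest sequence number: lock acquired
            }
            lockNode.awaitChange(snap.version);   // wait for the watch, then re-check
        }
    }

    // Runs n concurrent clients; returns {clients finished, max clients inside at once}.
    static int[] run(int n) throws InterruptedException {
        FakeLockNode lockNode = new FakeLockNode();
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger maxInside = new AtomicInteger();
        AtomicInteger finished = new AtomicInteger();
        List<Thread> clients = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            Thread t = new Thread(() -> {
                try {
                    String mine = acquire(lockNode);
                    maxInside.accumulateAndGet(inside.incrementAndGet(), Math::max);
                    Thread.sleep(5);              // use the shared resource
                    inside.decrementAndGet();
                    lockNode.delete(mine);        // deleting our child releases the lock
                    finished.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            clients.add(t);
            t.start();
        }
        for (Thread t : clients) t.join();
        return new int[] {finished.get(), maxInside.get()};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] result = run(5);
        System.out.println("finished=" + result[0] + ", maxInside=" + result[1]);
    }
}
```

Running main should print finished=5, maxInside=1: every client eventually gets the lock, and never more than one holds it at a time.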

While this doesn't sound too bad, there are a few potential gotchas. First, how would the client know that it successfully created the child znode if there is a partial failure (e.g. due to connection loss) during znode creation? The solution is to embed the client's ZooKeeper session ID in the child znode name, for example child-<sessionId>-; a failed-over client that retains the same session (and thus the same session ID) can then easily determine whether the child znode was created by looking for its session ID among the child znodes.

Second, in our earlier algorithm every client sets a watch on the parent lock znode. This has the potential to create a "herd effect": if every client is watching the parent znode, then every client is notified when any change is made to the children, regardless of whether that client would be able to own the lock. With a small number of clients this probably doesn't matter, but with a large number it can cause a spike in network traffic. The fix is for each client to watch only the child znode immediately preceding its own in sequence order. For example, the client owning child-9 need only watch its predecessor, which is most likely child-8 but could be an earlier child if the 8th child znode somehow died. When that watch fires, the client gets the children again and re-checks rather than assuming it now owns the lock, since its predecessor may have gone away without ever holding the lock. Notifications are then sent only to the client that can actually take ownership of the lock.
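Both fixes come down to a little bookkeeping over the child names. The following self-contained sketch assumes names of the form child-<clientId>-<sequence>, where the client ID is the session ID described above (a reader comment below suggests a GUID instead; the helpers work the same either way). LockChildren, findOwnChild, and predecessorOf are hypothetical names, not part of ZooKeeper or WriteLock. One detail worth noticing: once a client ID is embedded in the name, the children must be ordered by the sequence suffix, not by the full name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

public class LockChildren {

    // ZooKeeper appends a 10-digit, zero-padded counter to sequential znode names.
    static int sequenceOf(String name) {
        return Integer.parseInt(name.substring(name.length() - 10));
    }

    // Partial failure: after reconnecting with the same session, look for a child we
    // may already have created before creating another one.
    static Optional<String> findOwnChild(List<String> children, String clientId) {
        String prefix = "child-" + clientId + "-";
        return children.stream().filter(c -> c.startsWith(prefix)).findFirst();
    }

    // Herd effect: watch only the child immediately preceding ours in sequence order.
    // An empty result means ours has the lowest sequence number, so we own the lock.
    static Optional<String> predecessorOf(List<String> children, String mine) {
        // Sort on the sequence suffix; a plain string sort of "child-<id>-<seq>"
        // would order by client ID first.
        List<String> sorted = new ArrayList<>(children);
        sorted.sort(Comparator.comparingInt(LockChildren::sequenceOf));
        int index = sorted.indexOf(mine);
        if (index < 0) {
            throw new IllegalArgumentException(mine + " is not a child of the lock znode");
        }
        return index == 0 ? Optional.<String>empty() : Optional.of(sorted.get(index - 1));
    }

    public static void main(String[] args) {
        // The child with sequence 0000000008 is gone because its client died.
        List<String> children = Arrays.asList(
                "child-700-0000000009", "child-500-0000000006", "child-900-0000000007");

        String mine = findOwnChild(children, "700").get();
        System.out.println(mine);                                  // child-700-0000000009
        System.out.println(predecessorOf(children, mine).get());   // child-900-0000000007
        System.out.println(
                predecessorOf(children, "child-500-0000000006").isPresent()); // false
    }
}
```

Note that a plain string sort would put child-500 directly before child-700, so the client with sequence 9 would watch the wrong znode.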

Fortunately for us, ZooKeeper comes with a lock "recipe" called WriteLock, in the org.apache.zookeeper.recipes.lock package. WriteLock implements a distributed lock using the above algorithm and takes into account both partial failure and the herd effect. It uses an asynchronous callback model via a LockListener instance, whose lockAcquired method is called when the lock is acquired and whose lockReleased method is called when the lock is released. We can build a synchronous lock class on top of WriteLock by blocking until the lock is acquired. Listing 6 shows how we use a CountDownLatch to block until the lockAcquired method is called. (Sample code for this blog is available on GitHub at https://github.com/sleberknight/zookeeper-samples.)

Listing 6 - Creating BlockingWriteLock on top of WriteLock

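```java
import java.util.List;
import java.util.concurrent.CountDownLatch;

import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.ACL;
import org.apache.zookeeper.recipes.lock.LockListener;
import org.apache.zookeeper.recipes.lock.WriteLock;

public class BlockingWriteLock {

    private final String path;
    private final WriteLock writeLock;

    // Counted down when WriteLock reports that we own the lock. A latch can only be
    // counted down once, so each instance is good for a single lock()/unlock() cycle.
    private final CountDownLatch signal = new CountDownLatch(1);

    public BlockingWriteLock(ZooKeeper zookeeper, String path, List<ACL> acls) {
        this.path = path;
        this.writeLock =
            new WriteLock(zookeeper, path, acls, new SyncLockListener());
    }

    // Asks WriteLock to create our child znode, then blocks until lockAcquired() is called.
    public void lock() throws InterruptedException, KeeperException {
        writeLock.lock();
        signal.await();
    }

    // Deletes our child znode, letting the next waiting client acquire the lock.
    public void unlock() {
        writeLock.unlock();
    }

    // Bridges WriteLock's asynchronous callback to the blocking lock() method above.
    class SyncLockListener implements LockListener {

        @Override public void lockAcquired() {
            signal.countDown();
        }

        @Override public void lockReleased() { /* ignored */ }
    }
}
```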

You can then use the BlockingWriteLock as shown in Listing 7.

Listing 7 - Using BlockingWriteLock

```java
BlockingWriteLock lock =
    new BlockingWriteLock(zooKeeper, path, ZooDefs.Ids.OPEN_ACL_UNSAFE);
try {
    lock.lock();
    // do something while we own the lock
} catch (Exception ex) {
    // handle appropriately
} finally {
    // always release, even if lock() failed part-way or the work threw
    lock.unlock();
}
```

You can take this a step further, wrapping the try/catch/finally logic and creating a class that takes commands which implement an interface. For example, you can create a DistributedLockOperationExecutor class that implements a withLock method that takes a DistributedLockOperation instance as an argument, as shown in Listing 8.

Listing 8 - Wrapping the BlockingWriteLock try/catch/finally logic

```java
DistributedLockOperationExecutor executor =
    new DistributedLockOperationExecutor(zooKeeper);

executor.withLock(lockPath, ZooDefs.Ids.OPEN_ACL_UNSAFE,
    new DistributedLockOperation() {
        @Override public Object execute() {
            // do something while we have the lock
            return null; // or whatever result the operation produces
        }
    });
```

The nice thing about wrapping the try/catch/finally logic in DistributedLockOperationExecutor is that calling withLock eliminates the boilerplate code and makes it impossible to forget to release the lock.
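The withLock idea is an instance of the execute-around pattern. The sketch below is not the sample code's DistributedLockOperationExecutor; it assumes a hypothetical DistributedLock interface and uses java.util.concurrent.Callable in place of DistributedLockOperation, so that it runs without ZooKeeper. A BlockingWriteLock could be adapted to the interface, since it already has lock() and unlock() methods.

```java
import java.util.concurrent.Callable;

public class WithLockSketch {

    // Hypothetical minimal lock abstraction; not part of ZooKeeper or the sample code.
    interface DistributedLock {
        void lock() throws Exception;
        void unlock();
    }

    // Execute-around: the caller supplies only the work; locking and unlocking live here.
    // As in Listing 7, unlock() runs even if lock() fails part-way, which assumes
    // unlock() is safe to call when the lock was never acquired.
    static <T> T withLock(DistributedLock lock, Callable<T> operation) throws Exception {
        try {
            lock.lock();
            return operation.call();
        } finally {
            lock.unlock();
        }
    }

    // Hypothetical test double that just records the calls made on it.
    static final class RecordingLock implements DistributedLock {
        final StringBuilder log = new StringBuilder();
        @Override public void lock() { log.append("lock;"); }
        @Override public void unlock() { log.append("unlock;"); }
    }

    public static void main(String[] args) throws Exception {
        RecordingLock lock = new RecordingLock();
        String result = withLock(lock, () -> "did work");
        System.out.println(result + " " + lock.log);  // did work lock;unlock;

        try {
            withLock(lock, () -> { throw new IllegalStateException("boom"); });
        } catch (IllegalStateException expected) {
            System.out.println(lock.log);             // lock;unlock;lock;unlock;
        }
    }
}
```

The second call shows the point of the pattern: the operation throws, yet the lock is still released before the exception reaches the caller.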

Conclusion to Part 5

In this fifth blog on ZooKeeper, you implemented a distributed lock and saw some of the potential problems that should be avoided, such as partial failure on connection loss and the "herd effect". We took our initial distributed lock and cleaned it up a bit, which resulted in a synchronous implementation using DistributedLockOperationExecutor and DistributedLockOperation that ensures proper connection handling and lock release.

In the next (and final) blog, we'll briefly touch on administering and tuning ZooKeeper, introduce the Apache Curator framework, and finally summarize what we've learned.

References

- Source code for these blogs, https://github.com/sleberknight/zookeeper-samples

- Presentation on ZooKeeper, http://www.slideshare.net/scottleber/apache-zookeeper

- ZooKeeper web site, http://zookeeper.apache.org/

Using the Session ID as the method for working around connection issues during node creation is not ideal. It prevents the same ZK connection from being used in multiple threads for the same lock. It's much better to use a GUID. This is what Curator uses.

Posted by jordan@jordanzimmerman.com on July 11, 2013 at 11:31 AM EDT

Hi Jordan, thanks for your comment and I totally agree. In the next blog (to be published next week) I mention that people should take a look at Curator and Exhibitor once they understand the basics of ZooKeeper. And if I get some time after that I plan to write a few blogs reworking some of the examples I've used but using Curator, and probably showing that Curator comes with a ton of good production-quality recipes out of the box.

- Scott

Posted by Scott Leberknight on July 11, 2013 at 08:48 PM EDT